
Software rendering

Software rendering produces images of the highest quality, letting you achieve the most sophisticated results.

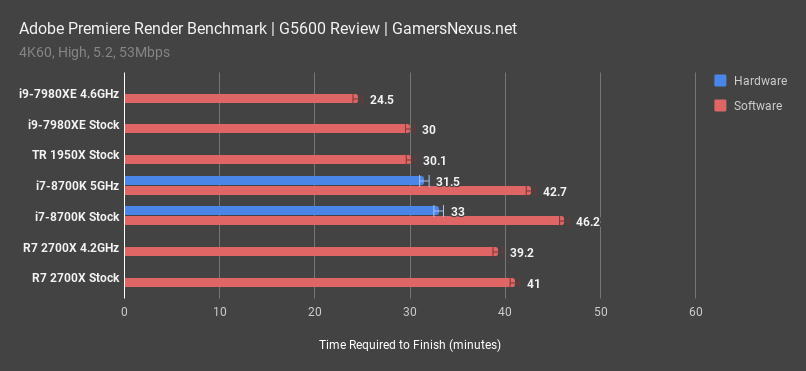

Computation occurs on the CPU, as opposed to hardware rendering, which relies on the machine’s graphics card. Because it is not restricted by the graphics card, software rendering is generally more flexible. The trade-off is that software rendering is usually more time-consuming.

Exactly what you can render depends on which software renderer you use and its particular limitations.

Maya has the following software renderers:

- The Maya software renderer

- NVIDIA® mental ray® for Maya®

Hardware rendering

Hardware rendering uses the computer’s video card and drivers installed on the machine to render images to disk. Hardware rendering is generally faster than software rendering, but typically produces images of lower quality compared to software rendering. In some cases, however, hardware rendering can produce results good enough for broadcast delivery.

Hardware rendering cannot produce some of the most sophisticated effects, such as certain advanced shadows, reflections, and post-process effects. To produce these kinds of effects, you must use software rendering.

Maya has the following hardware renderer:

- The Maya hardware renderer

More Information

Ideally, software rendering algorithms should be translatable directly to hardware. However, this is not possible because hardware and software rendering use two very different approaches:

- Software rendering holds the 3D scene to be rendered (or the relevant portions of it) in memory, and samples it pixel by pixel or subpixel by subpixel. In other words, the scene is static and always present, but the renderer deals with one pixel or subpixel at a time.

- Hardware rendering operates the opposite way. All pixels are present at all times, but rendering consists of considering the scene one triangle at a time, “painting” each one into the frame buffer. The hardware has no notion of a scene; only a single triangle is known at any one time.
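The two loop orders can be compared in a small Python sketch. Everything in it is a hypothetical simplification, not a description of how Maya or mental ray is built. Triangles are already in screen space, each triangle has one constant depth, and "colors" are single characters. `render_software` loops over pixels and may consult the whole scene for each sample. `render_hardware` loops over triangles and paints each one into a color buffer guarded by a depth (Z) buffer.

```python
from dataclasses import dataclass


@dataclass
class Triangle:
    # Screen-space vertices, one constant depth, and a color label.
    # A single depth per triangle is a simplifying assumption.
    v0: tuple
    v1: tuple
    v2: tuple
    depth: float
    color: str


def edge(a, b, p):
    # Signed-area test: which side of the edge a->b the point p lies on.
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covers(tri, p):
    # Point-in-triangle test that accepts either winding order.
    e = (edge(tri.v0, tri.v1, p), edge(tri.v1, tri.v2, p), edge(tri.v2, tri.v0, p))
    return all(v >= 0 for v in e) or all(v <= 0 for v in e)


def render_software(scene, width, height, background="."):
    # Pixel loop: the whole scene stays in memory, and each pixel sample
    # may consult every object to decide what it sees.
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # one sample at the pixel center
            hits = [t for t in scene if covers(t, p)]
            row.append(min(hits, key=lambda t: t.depth).color if hits else background)
        image.append(row)
    return image


def render_hardware(triangles, width, height, background="."):
    # Triangle loop: every pixel (color plus depth buffer) exists up front,
    # and triangles are painted in one at a time without seeing each other.
    color = [[background] * width for _ in range(height)]
    zbuf = [[float("inf")] * width for _ in range(height)]
    for tri in triangles:
        # Only this one triangle is known here; a real rasterizer would
        # also restrict the loops to the triangle's bounding box.
        for y in range(height):
            for x in range(width):
                p = (x + 0.5, y + 0.5)
                if covers(tri, p) and tri.depth < zbuf[y][x]:
                    zbuf[y][x] = tri.depth
                    color[y][x] = tri.color
    return color


scene = [
    Triangle((0, 0), (8, 0), (0, 8), 2.0, "A"),
    Triangle((2, 2), (8, 2), (8, 8), 1.0, "B"),  # nearer to the camera
]
for row in render_software(scene, 8, 8):
    print("".join(row))
```

For this opaque scene both loops produce the same image. The difference lies in what each inner step is allowed to see.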

Software is completely unconstrained, except by performance considerations, and can run any algorithm whatsoever. The subpixel loop is chosen because it allows rendering nonlocal effects, which require considering different portions of the scene to compute a single pixel or subpixel. For example, reflections require access to both the reflecting and the reflected object. A more complex example is global illumination, which considers the indirect light from all surrounding objects to compute the brightness of the subpixel being rendered. Hardware can do none of this because it only ever knows one triangle at a time and has no notion of other objects.
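The nonlocal case can be made concrete with a minimal ray-tracing sketch. It is not mental ray's algorithm, and the spheres, the `trace` and `intersect` helpers, and the string "colors" are all hypothetical. Because `trace` receives the entire scene, a mirror can cast a secondary ray and find the object it reflects. A per-triangle rasterization step has no such scene argument.

```python
import math
from dataclasses import dataclass


@dataclass
class Sphere:
    center: tuple
    radius: float
    color: str
    mirror: bool = False


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def intersect(sphere, origin, direction):
    # Nearest hit distance t > 0 along a unit-length ray, or None.
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # epsilon so a reflected ray does not re-hit its start point
            return t
    return None


def trace(scene, origin, direction, depth=0, max_depth=3, background="sky"):
    # The whole scene is passed in, so a mirror can look up the object it
    # reflects. A one-triangle-at-a-time loop has no such access.
    nearest = None
    for s in scene:
        t = intersect(s, origin, direction)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, s)
    if nearest is None:
        return background
    t, s = nearest
    if not s.mirror or depth >= max_depth:
        return s.color
    hit = add(origin, scale(direction, t))
    normal = scale(sub(hit, s.center), 1 / s.radius)  # unit surface normal
    reflected = sub(direction, scale(normal, 2 * dot(direction, normal)))
    return trace(scene, hit, reflected, depth + 1, max_depth, background)


scene = [
    Sphere((0, 0, 5), 1, "silver", mirror=True),  # in front of the camera
    Sphere((0, 0, -5), 1, "red"),                 # behind the camera
]
print(trace(scene, (0, 0, 0), (0, 0, 1)))  # prints "red"
```

The camera never sees the red sphere directly. The result only comes out right because the shading step for the mirror can query another object in the scene.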

Hardware rendering uses a limited range of workarounds to address some of these limitations. These workarounds typically involve pre-rendering objects into “maps”. Maps are rectangular pixel arrays that encode properties of other objects and are stored on the graphics hardware as texture images. Although graphics hardware cannot deal with multiple objects, it handles texture images very efficiently. Examples of such mapping techniques are:

- Shadow mapping renders the scene from the viewpoint of each light source and records only the depth of the frontmost object. During final rendering, this depth decides whether a point is in shadow: the point is shadowed if it is farther from the light than the recorded depth. This approach does not work well for area lights that cast soft shadows (shadows with fuzzy edges), and it cannot handle transparent objects such as stained glass or smoke.

- Reflection maps render the scene from the viewpoint of a mirror. This image is then “pasted in” when the final render needs to know what is seen in the mirror. This works only for flat or near-flat mirrors.

- Environment maps contain a hemispherical or complete view of the surroundings as seen from the rendered scene. Nearby objects are normally not included. Environment maps represent the scene poorly and cannot capture the arrangement of nearby objects at reasonable computational cost. They are still acceptable for highly curved reflectors such as chrome trim.
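Two of these maps can be sketched in a few lines of Python. The sketch rests on deliberately simplified, hypothetical assumptions: a 2D side view with an overhead orthographic light for the shadow map, and a latitude-longitude environment map with an assumed axis convention. The shadow test compares each point's distance to the light against a single stored depth per texel. That single depth is also why a basic shadow map cannot represent a fuzzy penumbra or a semi-transparent occluder. The environment lookup depends only on a direction, never on the surface position, which is why nearby objects are lost.

```python
import math

# --- Shadow map (2D side view, light straight overhead) ---
# Hypothetical setup: an orthographic light at height light_y shining
# straight down, and a scene of horizontal segments (x0, x1, y).


def build_shadow_map(segments, width, light_y):
    # Pass 1: render from the light, keeping only the frontmost depth per texel.
    depth = [math.inf] * width
    for x0, x1, y in segments:
        for texel in range(width):
            if x0 <= texel + 0.5 < x1:
                depth[texel] = min(depth[texel], light_y - y)
    return depth


def in_shadow(shadow_map, x, y, light_y, bias=1e-3):
    # Pass 2: a point is shadowed if it is farther from the light than the
    # recorded depth. The small bias stops surfaces from shadowing themselves.
    texel = math.floor(x)
    if not 0 <= texel < len(shadow_map):
        return False
    return light_y - y > shadow_map[texel] + bias


# --- Environment map lookup ---
# Assumed convention: latitude-longitude map, +y up, u = 0.5 facing -z.


def latlong_uv(direction):
    # Map a unit direction to (u, v) in [0, 1]; only the direction matters.
    x, y, z = direction
    u = 0.5 + math.atan2(x, -z) / (2 * math.pi)
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi
    return u, v


def env_lookup(env, direction):
    # env is a 2D grid of texels indexed [row][column].
    u, v = latlong_uv(direction)
    rows, cols = len(env), len(env[0])
    return env[min(int(v * rows), rows - 1)][min(int(u * cols), cols - 1)]


def shade_chrome(env, view_dir, normal, position):
    # `position` is deliberately unused: the map answers "what lies in this
    # direction?", not "what is visible from here?", so nearby objects and
    # the parallax between them are lost.
    k = 2 * sum(a * b for a, b in zip(view_dir, normal))
    reflected = tuple(a - k * b for a, b in zip(view_dir, normal))
    return env_lookup(env, reflected)


floor_and_table = [(0, 10, 0.0), (3, 6, 2.0)]
smap = build_shadow_map(floor_and_table, 10, light_y=10.0)
print(in_shadow(smap, 4.5, 0.0, 10.0))  # floor under the table: True
print(in_shadow(smap, 1.5, 0.0, 10.0))  # open floor: False
```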

Workarounds like these share one difficulty: they require a lot of manual preparation and fine-tuning to work, for example choosing a good environment map. The computer cannot do this automatically. Computing a correct map is possible, but it is so expensive that it would easily cancel out the expected time savings.

The driving force behind graphics hardware design is gaming, which involves rendering as many triangles per second as possible to a video screen. In addition, procedural shading on graphics hardware is still quite primitive and requires workarounds like those described above. This leads to several limitations of hardware rendering:

- Rendered images go to a video screen. Retrieving them to the main memory for further processing is often poorly optimized and slow. This can be the main factor limiting frame rates.

- To achieve full speed, the entire renderer must be carefully hand-crafted to the scene being rendered. This cannot be done for a general-purpose renderer.

- Many effects besides the mapping workarounds described above require rendering the scene multiple times, because the complexity of the effect exceeds the capacity of the hardware. This is called “layering” and is described in more detail below. Layering reduces the achievable frame rates.

- Multiple frame buffers are unavailable. Rendering to video only requires RGBA video with low bit depth and a depth (Z) buffer. General-purpose production rendering requires many frame buffers with high bit depths.

- Oversampling to reduce “staircase” aliasing is generally slow and has poor quality. The subpixels computed are inaccessible; only the final pixel is available.

- There is no good approach to motion blurring. This is critically important for film production.

- Textures must be stored in the video hardware before use. The available memory is usually 256 or 512 MB. Film production often uses gigabytes of textures, which requires very slow paging.

- Compatibility between the hardware from different vendors, and even between successive hardware generations from the same vendor, is very poor. Although OpenGL hides many differences, the most advanced features and the fastest speed paths generally have not found an abstraction in OpenGL yet. Hardware development cycles are extremely rapid.
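The memory limits in the list above can be checked with simple arithmetic. This sketch makes several assumptions of its own: textures are uncompressed, driver overhead is ignored, "512 MB" is read as 512 MiB, and the output is a 1920 × 1080 frame with an invented set of production buffers. None of these figures come from the Maya documentation.

```python
MIB = 2 ** 20  # assumption: the text's "MB" read as mebibytes


def texture_bytes(width, height, channels=4, bytes_per_channel=1, mipmaps=True):
    # Uncompressed size of one texture, optionally with its full mip chain.
    total, w, h = 0, width, height
    while True:
        total += w * h * channels * bytes_per_channel
        if not mipmaps or (w == 1 and h == 1):
            return total
        w, h = max(1, w // 2), max(1, h // 2)


def framebuffer_bytes(width, height, buffers):
    # buffers: one (channels, bytes_per_channel) pair per frame buffer.
    return width * height * sum(c * b for c, b in buffers)


# Video output: 8-bit RGBA color plus a 32-bit depth buffer.
video = framebuffer_bytes(1920, 1080, [(4, 1), (1, 4)])
# Hypothetical production set: float RGBA beauty, float depth, float
# normals, 2D float motion vectors, and four more float RGBA passes.
production = framebuffer_bytes(
    1920, 1080, [(4, 4), (1, 4), (3, 4), (2, 4)] + [(4, 4)] * 4
)

tex = texture_bytes(4096, 4096)  # 8-bit RGBA with mipmaps
print(f"video buffers:      {video / MIB:.1f} MiB")
print(f"production buffers: {production / MIB:.1f} MiB")
print(f"4K texture: {tex / MIB:.1f} MiB, {512 * MIB // tex} fit in 512 MiB")
```

Under these assumptions, one mipmapped 4096 × 4096 8-bit RGBA texture takes about 85 MiB, so only six fit in 512 MiB before any frame buffers are allocated. The production buffer set needs 13 times the memory of the video buffers.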

None of this is mentioned in the literature and sales material for graphics hardware boards. In general, it is unrealistic to expect the advertised real-time frame rates, often over 100 frames per second, for general-purpose rendering without the years of custom development that game designers generally invest.

Yet, despite all the limitations of hardware rendering, the things it can do are done at extremely high speed, sometimes more than a thousand times faster than software. Harnessing this speed is the object of the hardware shading support in mental ray 3.3. Although the limitations make it impossible to simply switch from software to hardware rendering, much of the advantage can be realized with an appropriate combination of hardware rendering and software rendering, combining hardware speed with software flexibility. This document describes how mental ray does this.

Sources: Autodesk Maya 2013 Help Documentation; Autodesk Maya 2011 Help Documentation